Background

In the consumer electronics industry, high resolution has become a widespread and expected feature that gives consumers a better entertainment experience. Home televisions now reach 4K resolution, and premium phones have 2K screens. However, a lot of content remains in standard definition (480p): movies, documentaries, TV news channels, and pictures on social media.

Traditional method vs AI-based method

Traditionally, devices upscale images with interpolation methods. New pixels are added using a fixed formula, without much understanding of the original content. As a result, the upscaled images suffer from visual artefacts, loss of clarity, or loss of texture detail.
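To make the "fixed formula" concrete, here is a minimal sketch of bilinear interpolation, one common interpolation method, in plain Python. The function name `upscale_bilinear` and the tiny test image are illustrative assumptions, not code from any particular device.

```python
def upscale_bilinear(image, factor):
    """Upscale a 2D grayscale image (a list of rows) by an integer factor."""
    src_h, src_w = len(image), len(image[0])
    out = []
    for y in range(src_h * factor):
        # Map the centre of the output pixel back into source coordinates.
        sy = min(max((y + 0.5) / factor - 0.5, 0.0), src_h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, src_h - 1)
        wy = sy - y0
        row = []
        for x in range(src_w * factor):
            sx = min(max((x + 0.5) / factor - 0.5, 0.0), src_w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, src_w - 1)
            wx = sx - x0
            # Weighted average of the four nearest source pixels.
            top = image[y0][x0] * (1 - wx) + image[y0][x1] * wx
            bottom = image[y1][x0] * (1 - wx) + image[y1][x1] * wx
            row.append(top * (1 - wy) + bottom * wy)
        out.append(row)
    return out


# A hard black-to-white edge, two pixels wide (hypothetical test input).
edge = [[0, 255], [0, 255]]
result = upscale_bilinear(edge, 2)
print(result[0])  # [0.0, 63.75, 191.25, 255.0]
```

The sharp edge turns into a gradual ramp: every new pixel is only a weighted average of its neighbours, so the formula cannot invent detail that was not already in the source.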

With the advent of AI, image super-resolution using deep learning can achieve superior aesthetics through a better understanding of the underlying features. This advantage is even more prominent on higher-resolution screens such as 4K and 8K.

Please see the following comparison:

Diagram 1: Traditional interpolation

Using the deep learning method, we can generate pixels intelligently so that pictures look better and show more detail. Please see the following image for comparison.

Diagram 2: AI-based super-resolution

Challenges of deploying super-resolution at the edge

TV makers have wanted super-resolution for some time, but its feasibility was questionable because of several challenges. First, the computing power required is vast, so super-resolution was only achievable in large server environments. You hear people say, “you need a 30 TOPS NPU to achieve 4K super-resolution on an edge device”. Indeed, applying super-resolution in a freestanding device would require a powerful chip. Second, people had little idea how much memory bandwidth was needed and whether it was realistic at the edge at all. Third, cost was a challenge. Some implementations use an ASIC to perform AI super-resolution. These can achieve adequate performance to run super-resolution on real-time video streams, but the cost is high because the silicon is large. Moreover, despite its expense, an ASIC implementation is not versatile: it can do nothing other than super-resolution.
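A rough back-of-the-envelope calculation helps put the compute and bandwidth concerns in perspective. The sketch below assumes 4K UHD output (3840×2160) at 60 frames per second in 8-bit RGB; none of these format details are stated above, and the calculation ignores the intermediate feature maps of a real network, which usually dominate memory traffic.

```python
# Assumed video format (not stated in the article): 4K UHD, 60 fps, 8-bit RGB.
WIDTH, HEIGHT = 3840, 2160
FPS = 60
BYTES_PER_PIXEL = 3
QUOTED_TOPS = 30  # the "30 TOPS" figure quoted in the text

pixels_per_second = WIDTH * HEIGHT * FPS
output_bytes_per_second = pixels_per_second * BYTES_PER_PIXEL

# If the whole quoted budget were spent on the model, this is the number of
# operations available for each output pixel.
ops_per_output_pixel = QUOTED_TOPS * 1e12 / pixels_per_second

print(f"{pixels_per_second:,} output pixels per second")
print(f"{output_bytes_per_second / 1e9:.2f} GB/s just to write the output frames")
print(f"about {ops_per_output_pixel:,.0f} operations per output pixel")
```

Under these assumptions, the output stream alone is roughly 1.49 GB/s, and a 30 TOPS budget corresponds to about 60,000 operations per output pixel. A lighter model lowers the compute requirement proportionally.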

Arm works with Imperial Vision Technology (IVT) to achieve super-resolution at the edge

IVT and Arm have partnered to optimize IVT’s super-resolution algorithm to run on Arm’s Ethos-N77 and Ethos-N78 NPUs, achieving the best performance in the market.

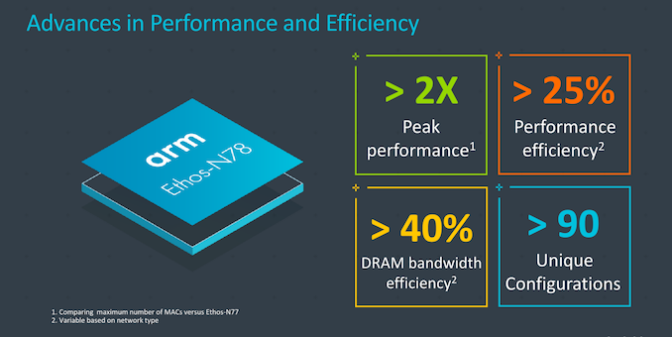

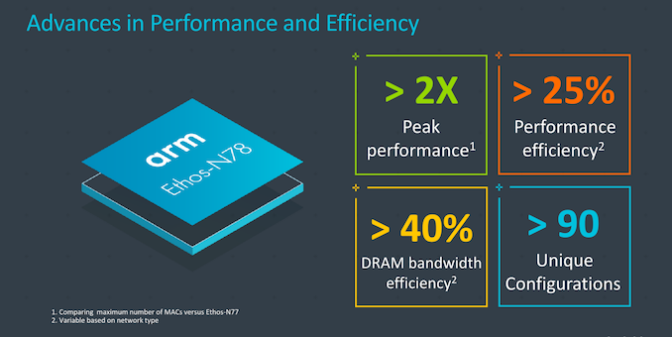

Diagram 3: Arm Ethos-N78

Arm and its partners create value in their collaboration by optimizing at the “IP level”. In many cases, algorithm developers need to invest a lot of time and engineering cycles to adapt to a specific chip. Then, when they change hardware, they need to reinvest to adjust to a new architecture. The best way to address this issue is to work on algorithms at the “IP level”. When algorithm developers use Arm IP, they can reuse their previous work on any Arm IP target. This reduces overall development time and effort, enabling projects to get to market faster.

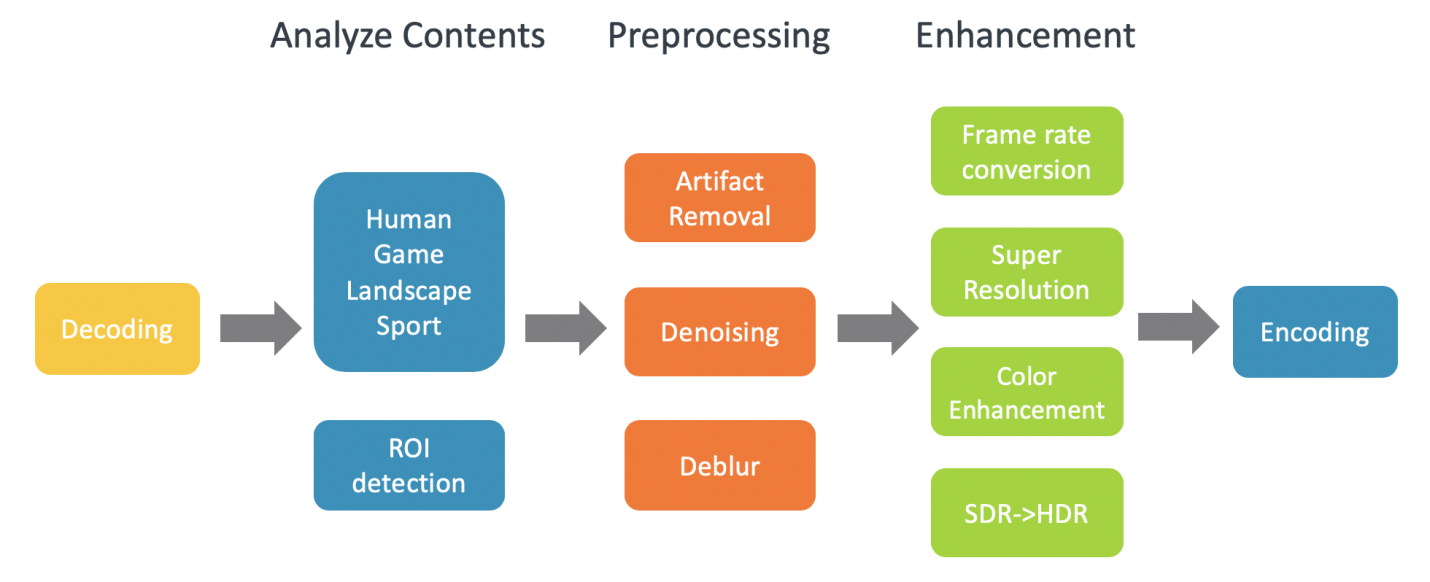

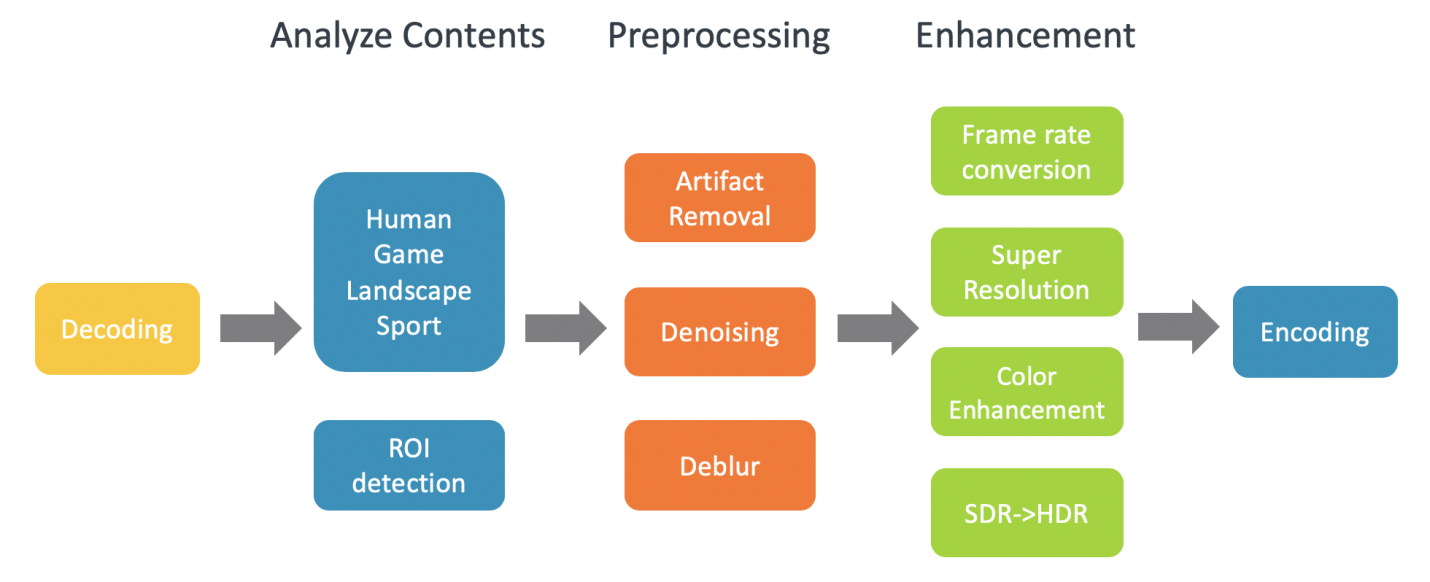

Diagram 4: Process of AI-based video restoration and enhancement

In this collaboration, we first ensured that all the computation happened on the NPU, because performance drops if individual operators run on the CPU or GPU instead. Second, we ran the super-resolution model on the NPU in real time. This step includes model tuning, model conditioning, inference optimization, data compression, data flow management, data I/O utilization, and compute utilization. Third, we improved the visual performance of the super-resolution model by fixing the operators and models on the NPU. This step includes training with a large database of varied content and compensating for the quantization loss, since the SR model runs on the NPU in int8. We improved the performance iteratively by applying all the previous steps.
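To illustrate where quantization loss comes from, the following sketch applies symmetric per-tensor int8 quantization to a handful of made-up weights and measures the round-trip error. This is a generic textbook scheme chosen for illustration; it is an assumption, not a description of the quantization flow used for IVT's model or by the Ethos-N toolchain.

```python
def quantize_int8(values):
    """Symmetric per-tensor quantization of floats to integers in [-127, 127]."""
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(v / scale))) for v in values]
    return quantized, scale


def dequantize(quantized, scale):
    """Map int8 values back to approximate floats."""
    return [q * scale for q in quantized]


# Hypothetical weights; the small value 0.003 falls below half a quantization step.
weights = [0.5, -1.27, 0.003, 0.9]
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print(q)          # [50, -127, 0, 90]
print(max_error)  # the 0.003 weight is rounded to zero, so the error is 0.003
```

Each value can be off by up to half a quantization step, and small values can vanish entirely. Errors of this kind accumulate across layers, which is why the model has to be trained or tuned to compensate.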

Using analysis tools and a developer board, we reached the super-resolution targets and achieved the required performance with excellent VMAF scores. Thus, the Arm NPU can support super-resolution from 720p or 1080p to 4K in real time. We hope that the collaboration between Arm and IVT, together with the analysis results, helps to define the chipset specifications for DTV, STB, and mobile SoCs.
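The two upscaling paths differ considerably in how much of the output the model must synthesize. The short calculation below assumes the usual pixel dimensions for each format (1280×720, 1920×1080, and 3840×2160 for 4K UHD); the helper name `upscale_ratio` is illustrative.

```python
# Assumed standard pixel dimensions for each format.
RESOLUTIONS = {"720p": (1280, 720), "1080p": (1920, 1080), "4K": (3840, 2160)}


def upscale_ratio(src, dst="4K"):
    """Return (scale per axis, ratio of output pixels to input pixels)."""
    src_w, src_h = RESOLUTIONS[src]
    dst_w, dst_h = RESOLUTIONS[dst]
    return dst_w / src_w, (dst_w * dst_h) / (src_w * src_h)


for name in ("720p", "1080p"):
    per_axis, pixel_ratio = upscale_ratio(name)
    generated = 1 - 1 / pixel_ratio  # share of output pixels with no source pixel
    print(f"{name} -> 4K: x{per_axis:g} per axis, x{pixel_ratio:g} pixels, "
          f"{generated:.0%} of output pixels generated")
```

Going from 1080p to 4K doubles each axis, so three of every four output pixels are new. Going from 720p triples each axis, so eight of every nine are new.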

Live demo created and shown

We also created a live demo on an FPGA board to exhibit at events and meetings in China, Taiwan, Japan, and Korea, where it has received considerable attention and feedback.

Diagram 5: Super-resolution live demo in Taiwan
